References

[1] Hohpe, G., Woolf, B. (2003). Enterprise Integration Patterns: Designing, Building, and Deploying Messaging Solutions. Addison-Wesley Professional. ISBN: 0321200683

[2] The Apache Software Foundation (24.11.2021) “Camel-K CLI (kamel)” [User Guide]. Retrieved from https://camel.apache.org/camel-k/next/cli/cli.html

[3] (24.11.2021) “OpenShift Integration” [GitHub Repository]. Retrieved from https://github.com/openshift-integration

[4] (24.11.2021) “Camel-K Basic Example” [GitHub Repository]. Retrieved from https://github.com/openshift-integration/camel-k-example-basic

[5] The Apache Software Foundation (24.11.2021) “Languages” [User Guide]. Retrieved from https://camel.apache.org/camel-k/1.7.x/languages/languages.html

[6] The YAKS Community (24.11.2021) “YAKS is a platform to enable Cloud Native BDD testing on Kubernetes” [GitHub Repository]. Retrieved from https://github.com/citrusframework/yaks

[7] The YAKS Community (24.11.2021) “Operator install” [User Guide]. Retrieved from https://citrusframework.org/yaks/reference/html/index.html#installation-operator

[8] The Apache Software Foundation (24.11.2021) “Autoscaling with Knative” [User Guide]. Retrieved from https://camel.apache.org/camel-k/next/scaling/integration.html#_autoscaling_with_knative

[9] (24.11.2021) “Camel-K Knative Example” [GitHub Repository]. Retrieved from https://github.com/openshift-integration/camel-k-example-knative

[10] The Apache Software Foundation (24.11.2021) “Creating a simple Kamelet” [User Guide]. Retrieved from https://camel.apache.org/camel-k/1.6.x/kamelets/kamelets-dev.html#_creating_a_simple_kamelet

[11] Red Hat (21.11.2021) “Camel K Supported Configurations” [Article]. Retrieved from https://access.redhat.com/articles/6241991

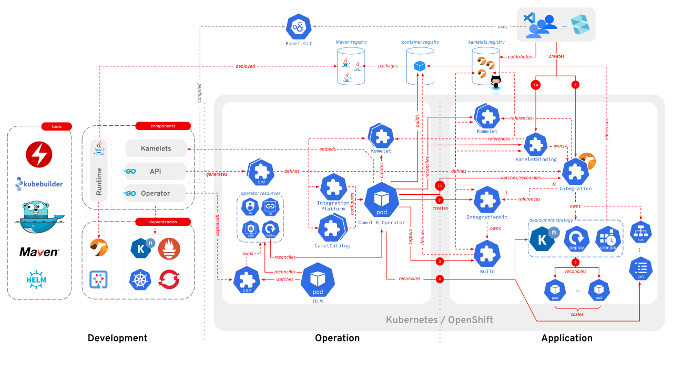

Fig. 1. The Apache Software Foundation (24.11.2021) “High Level Architecture”. Retrieved from https://camel.apache.org/camel-k/next/_images/architecture/camel-k-high-level.svg